Designer-Generative AI Ideation Process: Generating Images Aligned with Designer Intent in Early-Stage Concept Exploration in Product Design

Abstract

Background: With generative artificial intelligence (AI) now actively applied in creative fields, experiments incorporating Generative AI tools into the design ideation phase of product planning are increasing. This study therefore proposes a designer-Generative AI product design ideation process and validates its effectiveness through a workshop.

Methods: The designer-Generative AI product design ideation process, developed as a departure from traditional design processes, delineates the roles of designers and Generative AI and how the two complement each other. The model was concretized through a workshop with six college students, and its efficacy and limitations were then assessed through interviews.

Results: In the Writing Prompts and Result Analysis stage, using Generative AI to count keywords by category and to extract unfamiliar keywords, followed by iterative testing of semantically similar keywords, enabled precise keyword specification. In the Iterative Image Exploration stage, different prompting techniques made it possible to generate images centered on either the product or its context of use, as needed.

Conclusions: The model and workshop demonstrated the effectiveness of Generative AI in both idea generation and prompt refinement, underscoring the growing importance of designers' prompt-crafting skills. However, the approach struggles to depict novel forms of usability in images and requires external tools for precise design modifications.

Keywords:

Generative Artificial Intelligence, Ideation, Product Design, Prompt Crafting

1. Introduction

With the advancement of Artificial Intelligence (AI) technology, Generative AI tools are increasingly being used across various fields. Notably, the exploration of Generative AI in creative disciplines such as art, music, and literature is already well under way (Chung, 2022; Wu et al., 2021). Generative AI systems can enhance human creativity and open new possibilities in design. Muller et al. (2022) introduced the GenAICHI framework, aimed at facilitating synergistic collaboration between humans and Generative AI in design tasks. Sbai et al. (2018) underscored the capacity of generative networks to inspire designers. Cai et al. (2023) developed the DesignAID tool to stimulate creativity and support collaboration with AI throughout the design process. Koch et al. (2019) investigated the use of cooperative contextual bandits for generating design ideas, demonstrating the system's efficacy in aiding human designers. Furthermore, Weisz et al. (2023) highlighted the need for general design principles for Generative AI applications, offering guidelines from a computer science perspective.

These studies highlight the significant impact of Generative AI on the design industry. In corporate product design, as demonstrated by Audi's use of its AI software FelGAN for rim design inspiration (Geyer, 2022) and IKEA's experimentation with Generative AI in furniture design (Roettgers, 2023), Generative AI tools are being explored for product planning, with ongoing experiments aimed at integrating them into decision-making. This research therefore proposes a Designer-Generative AI Product Design Ideation Process, along with a method for structuring prompts throughout the planning and ideation stages. The method is intended to offer designers a broad range of inspiration during ideation while keeping the results aligned with their envisioned outcomes. It also seeks to identify the advantages of collaborating with Generative AI and to delineate its limitations and the additional functionalities or processes required.

To achieve these objectives, a comprehensive analysis was undertaken to understand the potential contributions of Generative AI in the ideation phases of the design process, considering the constraints identified in prior studies. From this examination, a Designer-Generative AI Product Design Ideation Process was proposed and implemented in a workshop designed for graduate students. Participants gathered design cases, generated keywords via Generative AI, organized these keywords on a positioning map, formulated prompts, and generated images. Finally, they refined their design concepts by creating concept sketches derived from the iterative exploration of images.

2. Related work

2. 1. Human-AI Collaborative Ideation in Design Process

To elucidate the role of Generative AI tools in design ideation, prevalent tools in current use were examined. Post-its are widely used in various activities such as brainstorming and affinity diagrams to generate creative ideas. This tool facilitates the visual organization of similar concepts and connections, thereby promoting the free exchange of ideas among team members and enhancing collaborative efficiency (Straker, 2009). Recently, digital whiteboards such as Miro and Mural have gained popularity for ideation, augmenting the benefits of traditional methods. These tools enable extensive collaboration in remote settings, unbounded by physical limitations. A key advantage of these tools is their capacity to share diverse types of data, including text, images, and videos, in real-time, significantly benefiting the ideation process (Crisp, 2021).

As diverse Generative AI tools rapidly proliferate, research increasingly examines different prompt configurations as a way to explore a broad spectrum of ideas efficiently during ideation. Chiou et al. (2023) adopted the annotated portfolios method introduced by Gaver and Bowers (2012) to bridge the gap between a designer's thoughts and the images generated by Generative AI during co-creation, an approach that proved effective in enhancing designers' understanding of Generative AI. Liu et al. (2022) systematically observed patterns in various prompt types throughout the creative process and organized them into prompt bibliographies. Cheng (2023) generated images using 17 distinct prompts and had seven experts evaluate how well each prompt was implemented. Furthermore, Joibi et al. (2023) performed a Semantic Differential Analysis, categorizing prompts into engineering, product, and affective domains, and used the resulting insights to tailor prompts accordingly.

However, these prompting methods remain limited because prompts are written and evaluated by humans alone. This study therefore pursues a new ideation method in which keywords are derived, and prompts composed, from the perspective of Generative AI.

3. Integrating Image Generative AI into the Product Design Process

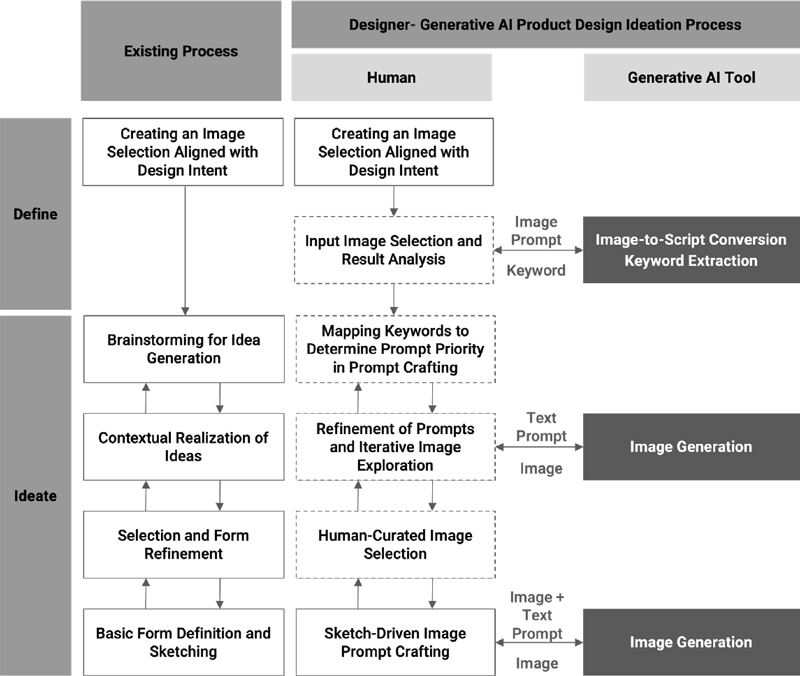

Figure 1 illustrates the roles of designers and Generative AI tools in the initial stages of the design process.

As illustrated in Figure 1, the Designer-Generative AI Product Design Ideation Process divides roles between designers and Generative AI tools for a stepwise progression in product design. Each stage at which designers can draw on Generative AI during ideation was defined, and roles were allocated to the designer and the AI tool accordingly. In the Define phase, designers supply reference images, selected according to their hypotheses and design intentions, as prompts to Generative AI tools such as Midjourney in order to extract keywords that help them generate a diverse array of images aligned with their expectations. In the Ideate phase, they use a Positioning Map to adjust text prompts based on these keywords and repeatedly generate increasingly refined images. Designers then analyze and adjust the images generated by Midjourney, archive selected ones, and proceed to the sketch-driven image prompt stage, in which Generative AI uses both image and text prompts for visualization while designers continuously refine the design concept through evaluation and interpretation.

3. 1. Define

In this phase, the focus is on deriving prompt keywords from design hypotheses and intent. Designers first set a clear design direction by considering the project's objectives, target audience, and fundamental design concepts, all of which play a vital role in the project. Building on these considerations, they gather target images from which key keywords can be derived to express the fundamental concepts and direction of the design visually. Each image captures elements the designer intends in terms of either shape or CMF (color, material, and finish). In preparation for keyword derivation with Generative AI in the next step, the images are carefully curated into an image collection. This procedure is intended to let designers and Generative AI develop creative ideas collaboratively during ideation, keeping the generated images close to the expected results while still exploring a diverse range of possibilities.

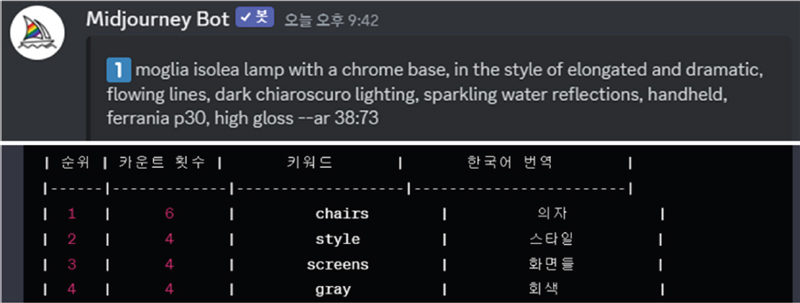

In the keyword extraction and refinement phase, both Midjourney's describe prompt and ChatGPT are used. The describe prompt, shown in the left image of Figure 2, generates descriptive sentences for a supplied image: each time an image is submitted, Midjourney returns four descriptive statements, and the request can be repeated. To refine the important keywords from these outputs, ChatGPT is used to rank and select the terms most aligned with the intended design concept, as shown in the right image of Figure 2.

This stage proceeds as follows:

- 1) Image Input: Images from the image collection are fed into the Midjourney Describe prompt.

- 2) Keyword Extraction: Midjourney offers sentence-based descriptions, producing four sentences per image.

- 3) Keyword Refinement: Midjourney's outputs are passed to ChatGPT to identify and organize pivotal keywords.

This approach extracts keywords from the images in the image collection expressed in Midjourney's own vocabulary rather than in human wording.
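In the process itself, ChatGPT performs the ranking. As a rough, deterministic stand-in for that counting step, the following Python sketch splits describe-style sentences into comma-separated phrases and counts how many descriptions each phrase appears in. It assumes the outputs are comma-separated phrases optionally followed by parameters such as `--ar 4:3`; the function names and sample sentences are hypothetical illustrations, not data from the workshop.

```python
from collections import Counter


def split_description(description: str) -> list:
    """Split one describe-style sentence into lower-cased, comma-separated phrases.

    Anything after the first '--' (e.g. an '--ar 4:3' aspect-ratio parameter)
    is dropped before splitting.
    """
    text = description.split("--", 1)[0]
    phrases = (p.strip().lower() for p in text.split(","))
    return [p for p in phrases if p]


def count_keywords(descriptions) -> Counter:
    """Count in how many descriptions each phrase appears (once per description)."""
    counts = Counter()
    for description in descriptions:
        counts.update(set(split_description(description)))
    return counts


# Invented example sentences, for illustration only.
descriptions = [
    "a white monitor on a wooden desk, soft and rounded forms, in the style of minimalism --ar 4:3",
    "a curved display with a fabric back, soft and rounded forms, curvaceous simplicity --ar 16:9",
    "a small tv screen, curvaceous simplicity, soft and rounded forms",
]
for phrase, n in count_keywords(descriptions).most_common(2):
    print(n, phrase)  # prints "3 soft and rounded forms", then "2 curvaceous simplicity"
```

Counting each phrase once per description favors terms that recur across many reference images over terms repeated within a single sentence.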

3. 2. Ideate: Idea Generation and Exploration

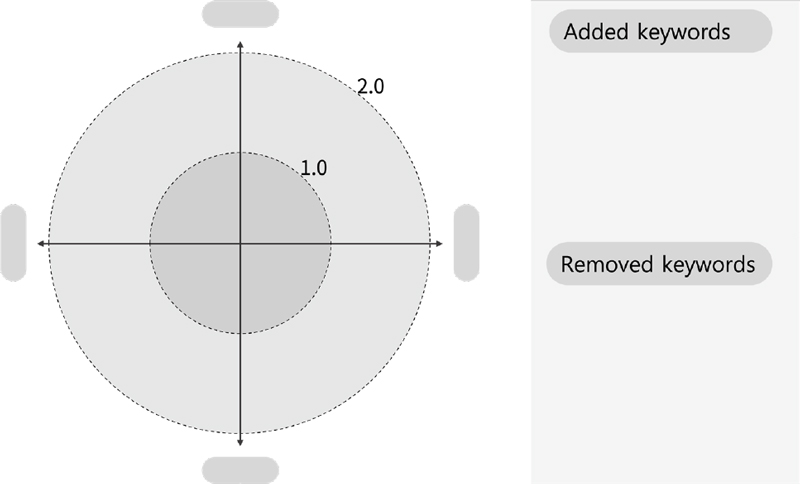

In the initial stages of image generation, prompts are relatively easy to understand. However, as prompts become longer and more complex, semantically overlapping keywords become hard to distinguish. A Positioning Map, as illustrated in Figure 3, addresses this issue: the designer first selects two main criteria closely related to the design concept and assigns them to the two axes. Keywords extracted from the image collection are then placed along these axes, with those farther from the center indicating higher importance. This matters because Midjourney treats the keywords placed first in a prompt as the most important, so the map clarifies the order in which keywords should appear in the prompt.

The positioning map serves to:

- Facilitate prompt refinement by enabling the addition or removal of keywords.

- Identify and eliminate semantically overlapping keywords.

- Assign importance to each keyword, emphasizing certain aspects over others.

Organizing keywords in this way makes prompt formulation more systematic and intuitive and helps keep prompts clear and relevant to the design concept.
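The three functions of the positioning map can be sketched in Python under a few stated assumptions: each keyword has (x, y) coordinates with the map's center at (0, 0), importance is taken as Euclidean distance from the center, and the designer tags semantically overlapping keywords with a shared group label. The class, function, group labels, and coordinates below are hypothetical; the paper does not prescribe a numeric scale.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class MappedKeyword:
    text: str
    x: float  # position on the first criterion axis (center = 0)
    y: float  # position on the second criterion axis (center = 0)
    group: Optional[str] = None  # hypothetical tag marking semantically overlapping keywords

    @property
    def importance(self) -> float:
        """Distance from the map's center: farther out means more important."""
        return math.hypot(self.x, self.y)


def prompt_from_map(keywords, max_keywords=None) -> str:
    """Keep the most important keyword per overlap group, then order by importance."""
    best = {}
    for kw in keywords:
        key = kw.group or kw.text  # ungrouped keywords stand alone
        if key not in best or kw.importance > best[key].importance:
            best[key] = kw
    ordered = sorted(best.values(), key=lambda k: k.importance, reverse=True)
    if max_keywords is not None:
        ordered = ordered[:max_keywords]  # drop the least important keywords
    return ", ".join(k.text for k in ordered)


# Invented coordinates on two illustrative axes.
keywords = [
    MappedKeyword("soft and rounded forms", 0.9, 0.2, group="rounded"),
    MappedKeyword("curvaceous simplicity", 0.6, 0.1, group="rounded"),
    MappedKeyword("warm wood texture", 0.1, 0.8),
    MappedKeyword("matte fabric", -0.3, 0.4),
]
print(prompt_from_map(keywords))  # soft and rounded forms, warm wood texture, matte fabric
```

Placing the most distant keyword first reflects the assumption, stated above, that earlier keywords carry more weight in Midjourney; adding or removing a `MappedKeyword` corresponds to moving a keyword on or off the map.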

Several methods exist for effective prompt creation in a Generative AI-based product design process. The design process in this study supports four methods, outlined in Table 1: Additive prompting, Single Concept prompting, Category prompting, and Narrative prompting, each chosen according to the stage and requirements of the design workflow. Additive prompting lists all desired elements separated by commas, much like a search-engine query; it makes prompt generation quick and intuitive, although longer prompts can become complex. Single Concept prompting focuses on one concept and suits initial ideas about themes, characters, or locations, but may require further development for detailed design. Category prompting separates elements with commas, pipes, or semicolons and labels them with category names, which aids automation and simplifies prompt adjustments in intricate designs. Narrative prompting, written in prose, effectively captures the emotional essence of a scene and is well suited to exploration, but its results are harder to analyze and control. Designers can choose a prompting method according to the stage of the design process, the type of product being designed, and whether the focus is on user experience or on concept ideas for product details.
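To make the differences between the four prompting methods concrete, the following Python sketch builds each style from the same design material. The function names, the `" | "` separator default, and the narrative sentence template are assumptions for illustration; Table 1 defines the methods, not these exact formats.

```python
def additive_prompt(elements):
    """Additive prompting: every desired element, separated by commas."""
    return ", ".join(elements)


def single_concept_prompt(concept):
    """Single Concept prompting: one theme, character, or location."""
    return concept.strip()


def category_prompt(categories, sep=" | "):
    """Category prompting: named categories; sep could also be '; ' or ', '."""
    return sep.join(f"{name}: {', '.join(values)}" for name, values in categories.items())


def narrative_prompt(subject, scene, mood):
    """Narrative prompting: one prose sentence (this template is only one possibility)."""
    return f"{subject} {scene}, with a {mood} atmosphere."


print(additive_prompt(["TV screen", "soft and rounded forms", "light oak frame"]))
# TV screen, soft and rounded forms, light oak frame
print(category_prompt({"Product": ["TV screen"], "Shape": ["soft and rounded forms"],
                       "CMF": ["light oak", "beige fabric"]}))
# Product: TV screen | Shape: soft and rounded forms | CMF: light oak, beige fabric
print(narrative_prompt("A person and a dog watch a TV screen", "in a small, sunlit living room", "calm"))
# A person and a dog watch a TV screen in a small, sunlit living room, with a calm atmosphere.
```

Because Category prompting keeps each element under a named key, a single category can be swapped programmatically, which is the automation advantage described above.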

In this step, designers review the images generated in the previous step and select specific elements to archive. Because Generative AI cannot accurately execute a designer's vision, designers look for serendipitous elements of inspiration in the images Midjourney creates during ideation, and these elements then guide the refinement of the concept.

Utilizing image archives from the previous phase, this step focuses on creating design concept sketches with elements inspired by collaborative works with Generative AI. This phase allows designers to refine and concretize initial design concepts based on the inspirations drawn from Generative AI.

4. Elaboration of the Generative AI Collaboration Process via Workshops

4. 1. Purpose and Methodology

To concretize and enhance the Designer-Generative AI Product Design Ideation Process, a workshop was conducted with six participants, as detailed in Table 2.

4. 2. Proposing Generative AI Tool Usage in Each Stage

The workshop was designed to have designers use the Designer-Generative AI Product Design Ideation Process to develop screen design ideas closely tied to the digital lifestyle of Generation Z. Throughout the process, Generative AI tools played a central role in realizing creative ideas and exploring design solutions that reflect Generation Z users' distinctive digital experiences. The workshop concentrated on conceptualizing screen designs aligned with this lifestyle, based on insights gained through interaction with Generative AI, in order to investigate the benefits of collaborating with Generative AI as well as its limitations and challenges.

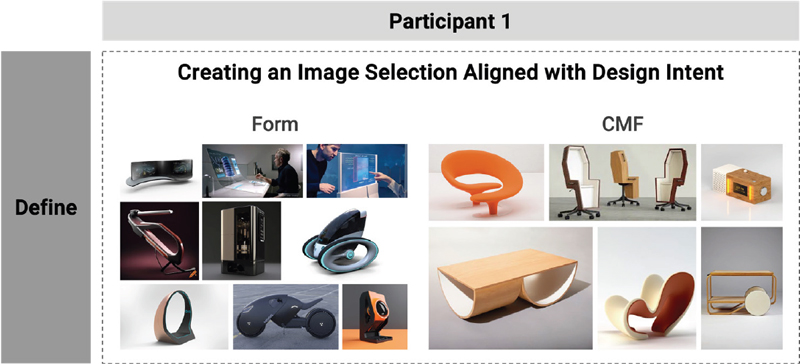

1) Creating an Image Collection Aligned with Design Intent

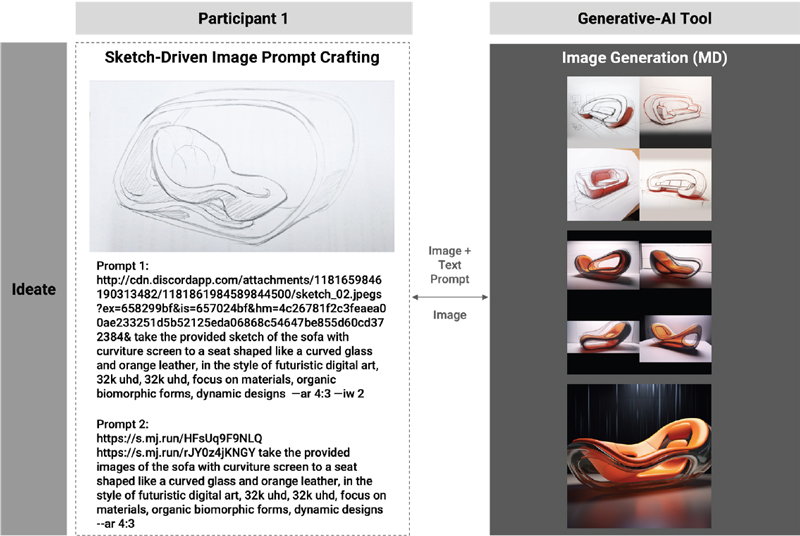

Participants identified screen design challenges from various perspectives, sourced reference images, and built image collections. Participant 1, focusing on a comfortable viewing experience for Generation Z, selected images with organic lines for the shape aspect, as illustrated in Figure 4.

For the CMF aspect, the image collections comprised elements that evoke comfort, to enhance Generation Z's experience of using screens. For instance, materials such as wood and fabric, though not directly applied to screen design, were considered essential for reflecting Generation Z's desire for screens that contribute to the household ambiance and express personal preferences, rather than serving solely as a medium for conveying information. Analysis and insight discovery were assigned to the designers, so each designer conducted and interpreted their own lifestyle analysis of Generation Z.

2) Input Image Collection and Result Analysis

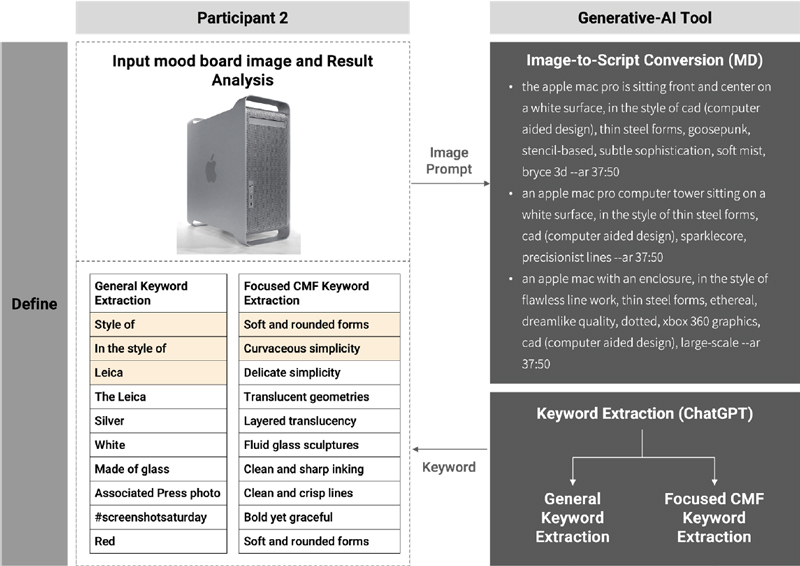

During this stage, participants used Midjourney's describe prompt to extract AI-generated descriptive phrases from the images in their collections, and ChatGPT then counted how often keywords occurred in these phrases. For participant 2, non-design-centric keywords such as 'style of' and 'Leica' unexpectedly ranked high, as depicted in Figure 5. To refine the analysis, participant 2 defined CMF-focused criteria for keyword identification, which yielded more pertinent keywords such as 'Soft and rounded forms' and 'Curvaceous simplicity'.
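The effect of defining category criteria can be approximated with a rule-based Python sketch: each phrase is assigned to the first category whose terms it contains, and phrases are counted per category, so terms such as 'style of' or 'Leica' fall into an "Other" bucket instead of competing with design keywords. The term lists, category names, and simple substring matching are hypothetical assumptions; in the workshop, participant 2 gave the criteria to ChatGPT rather than running code.

```python
from collections import Counter

# Hypothetical criteria; a designer would write their own term lists.
CATEGORY_TERMS = {
    "CMF": ["wood", "oak", "fabric", "matte", "glossy", "beige"],
    "Shape": ["rounded", "curv", "organic", "slim"],
}


def categorize(phrase, category_terms=CATEGORY_TERMS):
    """Return the first category whose terms occur in the phrase, else 'Other'."""
    text = phrase.lower()
    for category, terms in category_terms.items():
        if any(term in text for term in terms):  # crude substring match
            return category
    return "Other"


def count_by_category(phrases, category_terms=CATEGORY_TERMS):
    """Count lower-cased phrases separately within each category."""
    counts = {}
    for phrase in phrases:
        category = categorize(phrase, category_terms)
        counts.setdefault(category, Counter())[phrase.lower()] += 1
    return counts


phrases = ["Soft and rounded forms", "style of", "Leica", "light oak veneer",
           "soft and rounded forms", "curvaceous simplicity"]
for category, counter in count_by_category(phrases).items():
    print(category, counter.most_common())
```

Substring matching is deliberately simple here ('curv' matches both 'curved' and 'curvaceous'); a language model can judge category membership more flexibly, which is why the process relies on ChatGPT for this step.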

1) Mapping Keywords to Determine Prompt Priority in Prompt Crafting

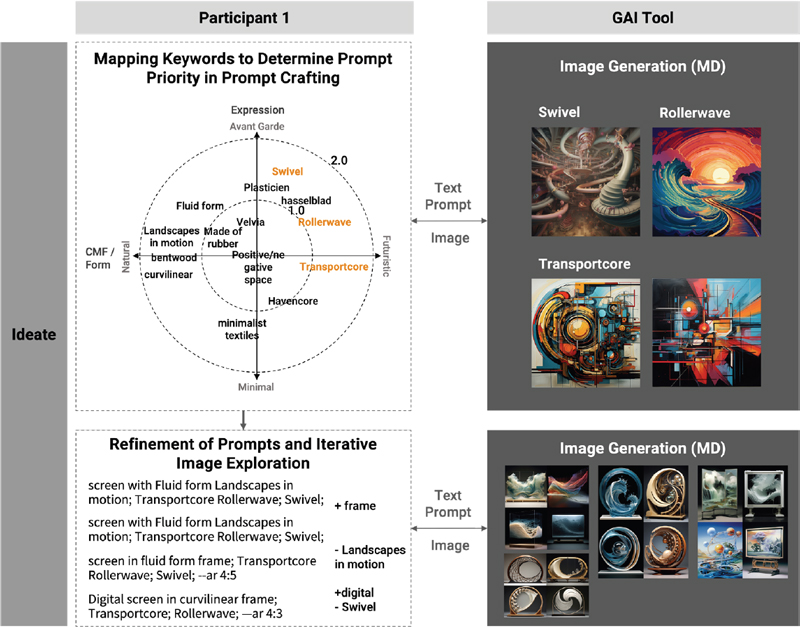

This phase improves the accuracy and relevance of prompts by mapping keywords on the Positioning Map. Participant 1 found unfamiliar words such as 'Rollerwave', 'Transportcore', and 'Swivel' among the extracted keywords and, as shown in Figure 6, used these keywords to generate images in Midjourney.

By using these generated images as image prompts together with text prompts, participant 1 could convey keywords that were difficult to describe in words, leading to more precise outcomes.

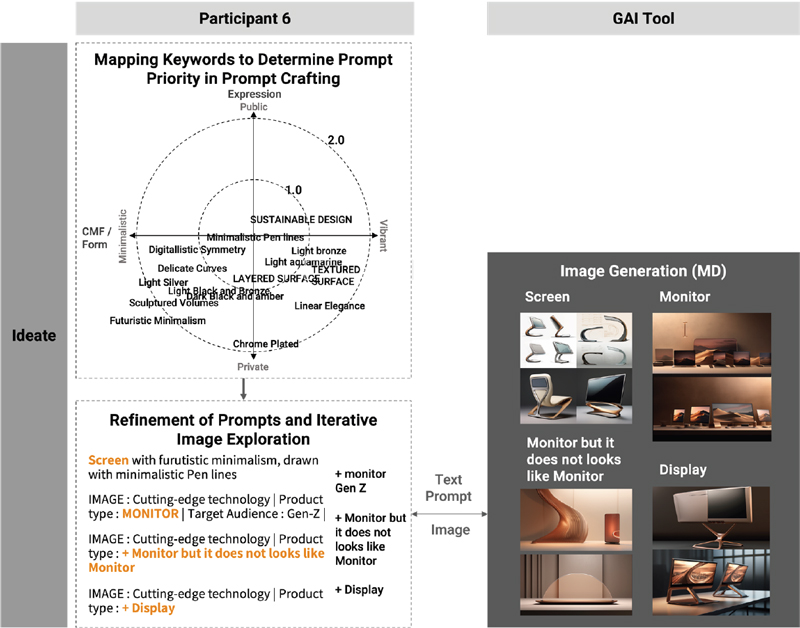

Participant 6, as illustrated in Figure 7, did not obtain satisfactory images with the keyword 'screen' and switched to 'monitor', which also failed to produce the desired results. They then tried a more abstract phrase, 'Monitor but it does not look like a monitor', and finally switched to 'display', iterating repeatedly to find the desired image.
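Participant 6's search can be made systematic by generating one prompt per candidate term while holding the rest of the prompt fixed, so that differences between the resulting images can be attributed to the swapped term. The Python sketch below assumes a `str.format`-style template with named slots; the function name and the fixed prompt text are hypothetical, while the candidate terms are the ones participant 6 tried.

```python
from itertools import product


def prompt_variants(template, slots):
    """Fill every combination of slot values into a str.format template."""
    names = list(slots)
    return [
        template.format(**dict(zip(names, values)))
        for values in product(*(slots[name] for name in names))
    ]


variants = prompt_variants(
    "{subject} with soft and rounded forms, light oak frame",
    {"subject": ["screen", "monitor", "Monitor but it does not look like a monitor", "display"]},
)
for prompt in variants:
    print(prompt)  # one prompt per candidate term; each would be submitted and compared
```

With more than one slot, `itertools.product` enumerates every combination, which grows quickly; keeping other slots fixed while varying one term mirrors the one-change-at-a-time exploration described above.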

2) Refinement of Prompts and Iteration Image Exploration

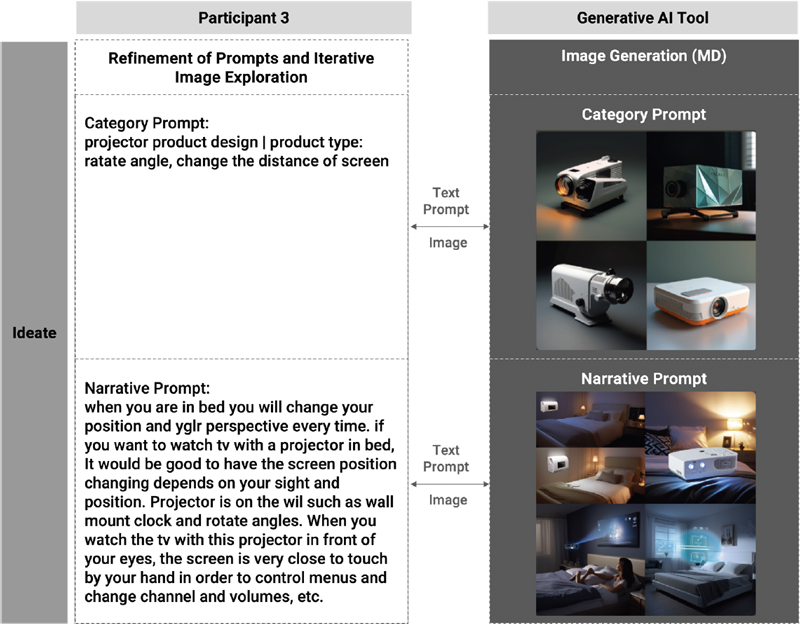

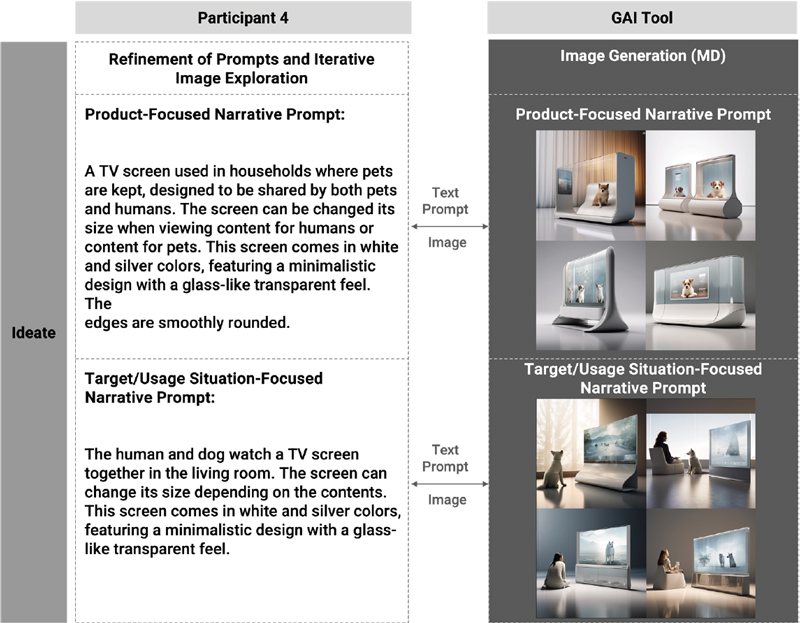

In this phase, participants explored different types of prompts to generate the desired images. Participant 3, as illustrated in Figure 8, used both Category prompting and Narrative prompting: Category prompting effectively generated images focused on product design, while Narrative prompting produced images of user experiences and scenarios. Participant 4 used Narrative prompting to generate images with two different focuses, product-centric and usage scenario-centric. As shown in Figure 9, in the product-centric prompt 'TV Screen' was placed first so that Midjourney would recognize it as the main element.

Conversely, in the target-centric prompt, ‘The human and dog watch a TV screen’ was used as the primary keyword to highlight the scenario of a human and a dog watching the TV screen. This approach assisted participant 4 in extracting design ideas centered on actual usage environments and scenarios.

Although participants 3 and 4 adopted different approaches to prompt utilization, both showed how experimental prompt formulation can yield a range of ways to generate the desired images.

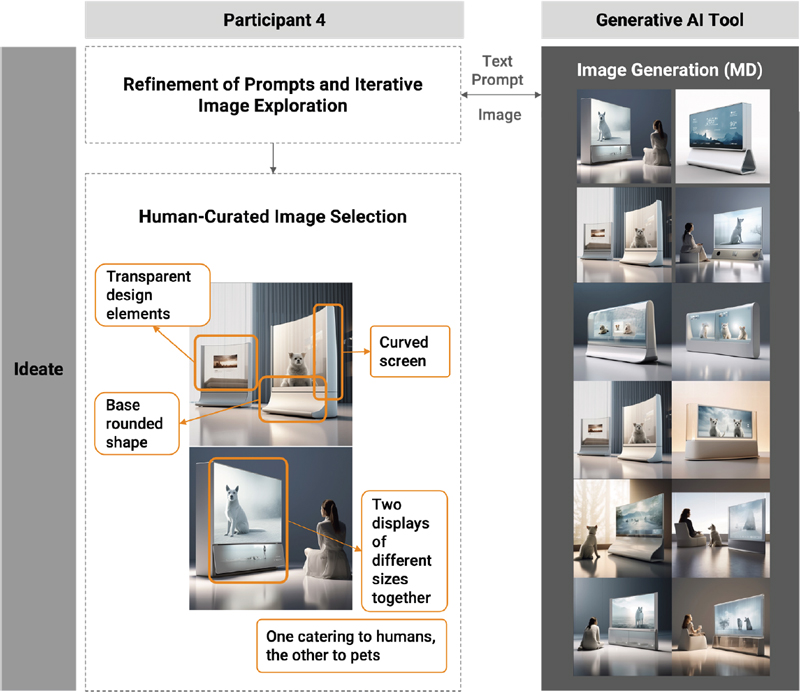

3) Human-Curated Image Collection

Participants extract and analytically assess design elements from generated images, concentrating on specific parts or features to refine and solidify ideas for their final design concept. Participant 4, as shown in Figure 10, combined the curved and multi-screen elements to create a multi-curved screen as a key design feature.

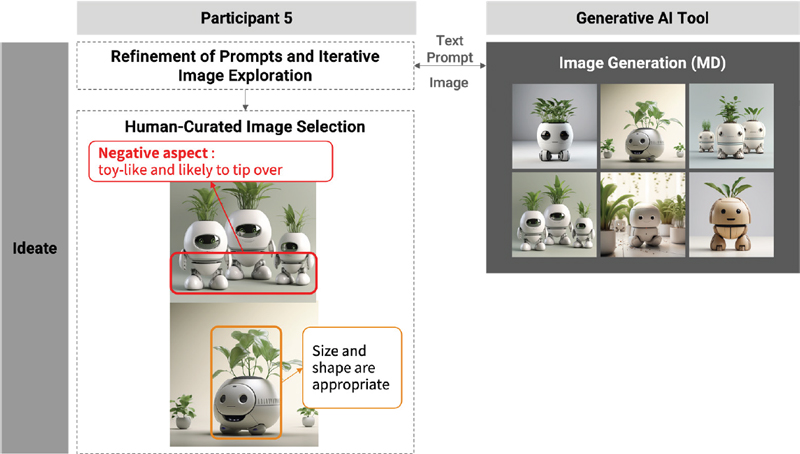

As shown in Figure 11, participant 5 annotated not only positive elements but also negative aspects, such as 'toy-like and likely to tip over', in their analysis of the generated images. This practice helps designers analyze generated images critically and develop their ideas further.
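A human-curated archive of this kind can be represented as a simple data structure in which each generated image carries the prompt that produced it plus the designer's positive and negative notes. The Python sketch below is a hypothetical illustration: the class, field names, image IDs, and prompts are invented, while the annotations echo participant 4's curved and multi-screen elements and participant 5's negative note.

```python
from dataclasses import dataclass, field


@dataclass
class ArchivedImage:
    image_id: str  # hypothetical identifier, e.g. a saved file name
    prompt: str  # prompt that produced the image
    positives: list = field(default_factory=list)  # elements worth keeping
    negatives: list = field(default_factory=list)  # problems to avoid


def collect_elements(archive):
    """Merge notes across the archive, de-duplicated in first-seen order."""
    keep = dict.fromkeys(p for img in archive for p in img.positives)
    avoid = dict.fromkeys(n for img in archive for n in img.negatives)
    return list(keep), list(avoid)


archive = [
    ArchivedImage("img_01", "curved TV screen, soft and rounded forms",
                  positives=["curved screen"]),
    ArchivedImage("img_02", "multi-screen display on a slim stand",
                  positives=["multi-screen layout", "curved screen"],
                  negatives=["toy-like and likely to tip over"]),
]
keep, avoid = collect_elements(archive)
print(keep)   # ['curved screen', 'multi-screen layout']
print(avoid)  # ['toy-like and likely to tip over']
```

Keeping the prompt next to each annotation lets designers trace a promising element back to the wording that produced it when they move on to sketching.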

4) Sketch-Driven Image Prompt Crafting

In the concluding phase, participants created sketches by combining ideas from the image archive. These sketches served as image prompts for refining concepts related to the desired textures and shapes, and the '--iw' (image weight) parameter was used to give the sketch image prompts priority over the text prompts during image generation. Nevertheless, Midjourney struggled to discern the composition and depth of the sketches well enough to incorporate additional details. Participant 1, for example, had difficulty achieving the envisioned prototype when integrating a transparent shell into a lounge chair sketch, because Midjourney could not distinguish the elements clearly, as shown in Figure 12. As a result, participants were limited in their ability to validate the anticipated prototype outcomes accurately.
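A sketch-driven prompt places the sketch's image URL before the text prompt and appends parameters such as `--iw` at the end. The Python helper below assembles such a string under those assumptions; the function name and URL are hypothetical, and because the accepted `--iw` range depends on the Midjourney model version, the value is passed through without validation (consult the current Midjourney documentation before relying on a specific weight).

```python
def sketch_prompt(image_urls, text, image_weight=None):
    """Assemble a Midjourney-style prompt: image URLs, then text, then parameters.

    image_weight, if given, is appended as the --iw parameter; its accepted
    range depends on the Midjourney model version, so it is not checked here.
    """
    if not image_urls:
        raise ValueError("at least one sketch image URL is required")
    parts = [*image_urls, text.strip()]
    if image_weight is not None:
        parts.append(f"--iw {image_weight}")
    return " ".join(parts)


print(sketch_prompt(["https://example.com/lounge-chair-sketch.png"],
                    "lounge chair with a transparent shell, soft fabric cushion",
                    image_weight=2))
# https://example.com/lounge-chair-sketch.png lounge chair with a transparent shell, soft fabric cushion --iw 2
```

A higher image weight only increases the sketch's overall influence; it does not tell the model which stroke is the shell and which is the seat, which is consistent with the difficulty participant 1 encountered.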

4. 3. Results

The Designer-Generative AI Product Design Ideation Process was refined and augmented based on participants' activities. This section evaluates the additional methods participants used to apply Generative AI within each stage of the process, and how effectively this collaboration produced images that accurately reflected the designers' intentions. The findings are summarized in Figure 13.

The table in Figure 13 illustrates the approaches adopted by participants in each phase and gathers their feedback from post-workshop interviews regarding the current strengths and limitations of the process, along with the features they wish to see added in the future.

In the Define phase of the Designer-Generative AI Product Design Ideation Process, designers formulate hypotheses based on their design intentions and input images from the image collection into Generative AI, which converts the images into keywords. As participant 2's approach shows, specifying categories such as CMF or environmental context can yield more relevant keywords. In the Ideate phase, designers carry out 'Mapping Keywords to Determine Prompt Priority in Prompt Crafting.' Participant 1 used unfamiliar keywords extracted by Generative AI to generate images and then used those images as image prompts, extending prompts beyond text to reach the desired outcomes. Participant 6 iteratively tested semantically similar keywords, illustrating prompt refinement and iterative image exploration, a step that requires supplying text prompts to the Generative AI tool to generate targeted images. The different prompting methods used by participants 3 and 4 made it possible to target specific image types, product focuses, or contextual themes. In the final ideation step, 'Sketch-Driven Image Prompt Crafting,' participants had difficulty achieving accurate prototype outcomes with Midjourney, highlighting the need for better use of sketches.

Participants commonly reported that Generative AI made the design process more efficient. However, the AI tools sometimes produced unexpected results, which prompted participants to reconsider their design approaches. They found that Midjourney and ChatGPT helped surface unexpected keywords that aided in creating accurate images. Some participants found it difficult to grasp new contexts through AI but noted that the generated images served as diverse reference material, increasing the flexibility of the design process. For future improvements, participants agreed on the need for better AI analysis of context based on user input, creative prompt suggestions, and more detailed depiction in AI-generated images.

5. Discussion

Following the collaboration process with Generative AI, participants frequently cited the rapid visualization of ideas as a key benefit of Generative AI. However, to fully harness the potential of Generative AI, it is crucial to bridge the gap between individuals' expectations and the images generated (Han et al., 2023), and crafting a structured prompt is essential to accomplish this. This study therefore explored prompt crafting through a method that combines keyword extraction using Generative AI with a positioning map. During the workshop, some participants refined keywords in detail by feeding them into Midjourney, while others focused on extracting keywords specifically related to CMF. This showed that Generative AI can be used effectively to further structure and refine keywords for more precise image generation.

Despite the structured keyword system, Generative AI tools had difficulty rendering prompts that described innovative forms of product usage, owing to their inherent characteristics. Because these tools tend to autocorrect typos and smooth over linguistic nuances (Heller, 2023), they often pull unconventional scenarios back toward familiar ones and fail to generate accurate images. Envisioning novel product usage, such as ceiling-mounted screens, therefore calls for new approaches to keyword coordination and prompt formulation.

Moreover, Midjourney showed limitations in partially modifying generated designs: adjusting small portions of an existing design to the desired extent proved nearly impossible. While Generative AI tools like Midjourney are useful in the early phases of product design, conventional 3D modeling tools, or AI tools better integrated with them, appear more suitable for finalizing a design and making detailed modifications. Features that allow designers to adjust numerical values, such as the corner radius of part of a generated product image, or to generate back and side views of the same product, ultimately leading to 3D rendering, are therefore expected to further enhance the product design process. Such enhancements could be complemented by other approaches tailored to various purposes and methods, including using sketches as input (Zhang et al., 2023), generating specific images through product image archiving (Suessmuth et al., 2023), real-time design modification with Generative AI (Krea, n.d.), shaping designs into 3D models (Nichol et al., 2023), and employing Generative AI for human collaboration (Shin et al., 2023; Verheijden et al., 2023). Subsequent research can thus explore more efficient and creative collaboration between designers and Generative AI by pairing suitable Generative AI tools with each stage of the design process, departing from traditional methods.

In this study, AI played a significant role as a collaborative tool harnessing idea generation capabilities. However, there are instances where AI can replace design decisions made by human designers with data-driven processes. Depending on the dataset referenced, the outcomes may vary, potentially enabling a more diverse collaboration based on the dataset’s format. Consequently, designers may need to understand the dataset’s characteristics and utilize datasets with various attributes to achieve their design objectives. Thus, this study serves as a starting point for future research focusing on exploring collaboration strategies between designers and AI to fulfill designers’ intentions.

6. Conclusion

In this study, a Designer-Generative AI Product Design Ideation Process was proposed to foster creative ideation during product design. The process involves structuring prompts to generate images aligned with the designer's intent and using the generated images to concretize ideas through collaboration between the designer and Generative AI. The process was further developed through workshops, and the subsequent examination explored the advantages and limitations of this collaboration as well as areas for further advancement.

To bridge the gap between designers' expectations and the images generated by Generative AI, the model extracts keywords from an image collection using Generative AI and then maps them according to their significance. Carefully refining the prompt, by assessing how each keyword's significance affected the generated image and feeding these insights back into Generative AI, proved beneficial for future prompting strategies. The subtle variations in images produced by different keyword orders or combinations highlighted the need for iterative exploration to reach the desired outcome. At the same time, the unexpected images generated during this process also served as sources of ideas.

Throughout this investigation, it was evident that ideation through collaboration with Generative AI helps generate diverse ideas and concretize design concepts. This suggests that prompt-crafting skills may become an increasingly important part of the designer's role in ideation.

Acknowledgments

This work was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea (NRF-2023S1A5A2A03084950).

The first author (Jungryun Kwon) and the co-author (Eui-Chul Jung) contributed equally to this work.

Notes

Copyright : This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted educational and non-commercial use, provided the original work is properly cited.

References

- Cai, A., Rick, S. R., Heyman, J. L., Zhang, Y., Filipowicz, A., Hong, M., ... & Malone, T. (2023, November). DesignAID: Using Generative AI and Semantic Diversity for Design Inspiration. In Proceedings of The ACM Collective Intelligence Conference (pp. 1-11). https://doi.org/10.1145/3582269.3615596

- Cheng, S. H. (2023). Impact of Generative Artificial Intelligence on Footwear Design Concept and Ideation. Engineering Proceedings, 55(1), 32. https://doi.org/10.3390/engproc2023055032

- Chiou, L. Y., Hung, P. K., Liang, R. H., & Wang, C. T. (2023, July). Designing with AI: An Exploration of Co-Ideation with Image Generators. In Proceedings of the 2023 ACM Designing Interactive Systems Conference (pp. 1941-1954).

- Chung, J. (2022). Case study of AI art generator using artificial intelligence. trans, (13), 117-140. https://doi.org/10.23086/trans.2022.13.06

- Crisp, B. (2021, August 11). Mural or Miro: A Design Thinker's Guide. Design Thinkers Academy London. Retrieved January 26, 2024, from https://designthinkersacademy.co.uk/blogs/media/mural-or-miro-a-design-thinkers-guide

- Gaver, B., & Bowers, J. (2012). Annotated portfolios. interactions, 19(4), 40-49. https://doi.org/10.1145/2212877.2212889

- Geyer, V. (2022, December 12). Reinventing the Wheel? "FelGAN" Inspires New Rim Designs with AI. Audi MediaCenter. Retrieved January 28, 2024, from https://www.audi-mediacenter.com/en/press-releases/reinventing-the-wheel-felgan-inspires-new-rim-designs-with-ai-15097

- Han, D., Choi, D., & Oh, C. (2023). A Study on User Experience through Analysis of the Creative Process of Using Image Generative AI: Focusing on User Agency in Creativity. The Journal of the Convergence on Culture Technology (JCCT), 9(4), 667-679. https://doi.org/10.17703/JCCT.2023.9.4.667

- Heller, M. (2023, August 14). The Engines of AI: Machine Learning Algorithms Explained. InfoWorld. Retrieved January 25, 2024, from https://www.infoworld.com/article/3702651/the-engines-of-ai-machine-learning-algorithms-explained.html

- Joibi, J. C., Yun, M. H., & Eune, J. (2023, May). Exploring the Impact of Integrating Engineering, Product, and Affective Design Semantics on the Performance of Text-to-Image GAI (Generative AI) for Drone Designs. In Proceedings of KSDS Spring International Conference, 120-125.

- Koch, J., Lucero, A., Hegemann, L., & Oulasvirta, A. (2019, May). May AI? Design ideation with cooperative contextual bandits. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-12). https://doi.org/10.1145/3290605.3300863

- Liu, V., & Chilton, L. B. (2022, April). Design guidelines for prompt engineering text-to-image generative models. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (pp. 1-23). https://doi.org/10.48550/arXiv.2109.06977

- Liu, V., Vermeulen, J., Fitzmaurice, G., & Matejka, J. (2023, July). 3DALL-E: Integrating text-to-image AI in 3D design workflows. In Proceedings of the 2023 ACM Designing Interactive Systems Conference (pp. 1955-1977). https://doi.org/10.1145/3563657.3596098

- Muller, M., Chilton, L. B., Kantosalo, A., Martin, C. P., & Walsh, G. (2022, April). GenAICHI: generative AI and HCI. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7). https://doi.org/10.1145/3491101.3503719

- Roettgers, J. (2023, March 26). Ikea's Generative AI Furniture Designs Are Trippy, Retro, and Inspiring. Fast Company & Inc. Retrieved January 28, 2024, from https://www.fastcompany.com/90871133/ikea-generative-ai-furniture-design

- Sbai, O., Elhoseiny, M., Bordes, A., LeCun, Y., & Couprie, C. (2018). Design inspiration from generative networks. Cornell University. https://doi.org/10.48550/arXiv.1804.00921

- Straker, D. (2009). Rapid problem solving with post-it notes. Da Capo Lifelong Books.

- Weisz, J. D., Muller, M., He, J., & Houde, S. (2023). Toward general design principles for generative AI applications. arXiv preprint. https://doi.org/10.48550/arXiv.2301.05578

- Wu, Z., Ji, D., Yu, K., Zeng, X., Wu, D., & Shidujaman, M. (2021). AI creativity and the human-AI co-creation model. Proceedings of HCII 2021 (pp. 171-190). https://doi.org/10.1007/978-3-030-78462-1_13