Do Computers Have Gender Roles?: Investigating Users’ Gender Role Stereotyping of Anthropomorphized Voice Agents

Abstract

Background Commercial voice agents have been designed and developed with a female gender by default, which can lead users to reinforce gender stereotypes. Because gender stereotypes are related to judgments about gender roles, we aim to investigate, with empirical evidence, whether voice agents induce gender stereotyping among users.

Methods An online survey was conducted with 110 female and 82 male participants to examine the extent of users' gender role stereotyping, based on the perceived gender appropriateness of user commands for voice agents. To this end, we developed a set of user commands whose topics were related to gender role stereotypes and asked participants to rate, on a 7-point Likert scale, whether each command was more appropriate for a male or a female agent.

Results Participants expected a female agent to perform better when they made female-trait-based user commands, and likewise expected a male agent to perform better for male-trait-based user commands. In addition, female participants were more likely than male participants to judge female-trait-based user commands as suited to female agents, whereas no such difference between participant genders was found for male-trait-based user commands.

Conclusions Based on the results, we suggest strategies for designing anthropomorphized AI agents that prevent gender bias and stereotyping. Future work should empirically examine how voice agents can be designed to avoid reinforcing gender stereotypes. The present study and such future studies are expected to provide a novel viewpoint on designing anthropomorphic voice agents that prevent gender stereotyping.

Keywords:

Voice Agents, Gender Roles, Anthropomorphism, Human-Computer Interaction

1. Introduction

As artificial intelligence (AI)-based voice agents develop, user expectations of the agent’s role are growing from executing voice commands to holding conversations (Luger, & Sellen, 2016). Alongside leading global companies such as Google, Apple, Amazon, and Microsoft, Korean companies including SK Telecom, Samsung Electronics, KT, and Kakao have developed voice agents and entered the market. As the types of voice agents have diversified, the range of available functions has also expanded. Simple functions such as playing music, ordering products, and searching for information were initially popular, but more recently, functions have expanded to include reading fairy tales (Xu, & Warschauer, 2019), learning foreign languages (Pastrovich, 2017), and even booking restaurant reservations (Vincent, 2021). The popularization of voice agents has raised questions about the gender of the agents. Most currently commercialized voice agents were initially developed with a female voice as the default. As Table 1 indicates, representative global agents such as Amazon’s Alexa, Apple’s Siri, and Microsoft’s Cortana, as well as Korean agents such as SK Telecom’s Aria, Samsung Electronics’ Bixby, and Naver’s Sally, were all developed with a female voice, and several were even given female names (Lee, 2018). For example, Siri is a Scandinavian female name meaning “beautiful woman who leads you to victory” (Abercrombie, Curry, Pandya, & Rieser, 2021).

If a voice agent is designed as female, it can lead to gender stereotyping (Eagly, & Wood, 1999), because companies describe their voice agents as digital assistants, which reinforces the gender stereotype associated with the assistant role. Historically, the role of an office assistant has been filled by women, and this labor has thus been gendered female. Because of this gendering, a digital assistant that sounds normatively like a woman can be problematic: voice agent developers may adhere to existing gender stereotypes, or even produce new ones, when designing agents. For instance, a previous study revealed that the use of female-voice agents induces both short- and long-term gender discrimination, such as expecting quicker responses from female subordinates than from male subordinates (Weisman & Janardhanan, 2020). A report published by EQUALS and UNESCO, a United Nations agency, also states that female-voice agents can reinforce prevalent harmful gender stereotypes (West, Kraut, & Chew, 2019). The report describes how voice agents can foster gender discrimination, since male voices tend to be perceived as authoritative and female voices as helpful. Moreover, the authors found that voice agents with female voices are subjected to sexual harassment. Indeed, ‘Lee Luda,’ a South Korean AI chatbot designed as a twenty-year-old female character, was suspended amid controversy over sexually harassing messages from users (Kim, 2021).

As these previous studies suggest, designing voice agents based on gender stereotypes can cause discrimination. In this regard, Apple announced that users would be able to select the voice of Siri when setting up their phones (Fournier-Tombs, 2021). Similarly, Samsung provides various voice styles for its voice agent Bixby, allowing users to set up their own Bixby (Samsung, 2023). In contrast, Google does not allow users to choose the voice of Google Assistant; its voice and gender are selected automatically according to the language (Google, 2023). These differences in voice-setting policies among leading global companies suggest that a design standard for voice agents that accounts for gender stereotyping is needed. However, existing studies have focused on agents’ responses to users’ requests rather than on users’ perceptions of voice agents as social interlocutors, particularly from a gender perspective (Søndergaard, & Hansen, 2018; Hwang, Lee, Oh, & Lee, 2019). Thus, there is a lack of detailed research on how user expectations vary depending on the agent’s gender.

Therefore, in this study, we investigated how the gender of anthropomorphized voice agents affects users’ gender stereotyping of the agents. To this end, we reviewed previous studies on the social roles of gender and on voice agents. We then conducted an online survey of 192 people to examine how users perceive the appropriateness of voice commands for each gender of anthropomorphized voice agent. In the following sections, we first introduce social role theory and previous research on voice agents, and then describe the online survey and its results. In the Discussion, we review the survey results and present design guidelines for reducing the gender stereotyping induced by anthropomorphized voice agents. Finally, we describe future work and conclusions. We expect this study to reveal people’s tendency to apply gender stereotypes to voice agents. We also expect that designers of AI-based voice agents will be able to identify important issues related to gender stereotypes and discrimination, and will be inspired by the design implications of this study to design voice agents appropriately.

2. Related Works

Most voice agents have been presented as intelligent virtual personal assistants (Loideain, & Adams, 2020). To explain why voice agents have been designed as female assistants, we review social role theory. According to social role theory, people expect women and men to have certain characteristics based on the activities each performs in society (Eagly, 2013). The theory also explains that, historically in Western societies, men were more engaged in tasks of higher power and status, whereas women were primarily assigned parenting roles, creating stereotypes that associate men with agency and women with communion. For example, men tend to be perceived as doctors rather than nurses, and women as nurses rather than doctors (Wilbourn & Kee, 2010). Such associations form gender roles, which typically arise from the occupations held by each gender. In other words, the characteristics needed to perform a task are what trigger gender stereotypes. The division of labor has assigned domestic or subordinate behavior to women and dominant behavior and resource acquisition to men (Eagly, & Wood, 1999). However, these expectations can lead to confirmation bias, in which people overlook counterexamples such as female doctors and male nurses (Tinsely, & Ely, 2018). In other words, gendered expectations lead people to evaluate behavior against gender roles, so behavior that falls outside the gender role frame can be judged inappropriate (Brescoll, Dawson, & Uhlmann, 2010).

Under the paradigm of computers as social actors, people are subconsciously aware of gender even when the interlocutor is a computer (Nass, Steuer, & Tauber, 1994). According to a previous study, people responded differently to robots based on their perceived gender, using more words when communicating with a robot of the opposite gender than with a robot of the same gender (Powers et al., 2005). People not only recognized gender in computers but also assigned social roles based on the computers’ perceived gender. In other words, once people perceive computer agents as anthropomorphic, they can apply gender stereotypes to them. Nass et al. revealed that computer agents presented as male were rated as knowing more about technology-related topics, whereas those presented as female were rated as knowing more about love and relationships (Nass, Moon, & Green, 1997). In another study, male-voiced computers were subjectively rated as friendlier than female-voiced ones (Nass, & Moon, 2000), which suggests that people hold different gender role expectations not only for humans but also for computer agents. These findings indicate that voice agents can unintentionally become tools that strengthen fixed gender-based social roles (Robertson, 2010).

3. Methods

As noted above, people ascribe different social roles depending on the subject’s gender, and this tendency can extend to computer systems. To obtain empirical evidence, we conducted an online survey on the gender role stereotypes that users have formed about the voice agents currently in use. To investigate how people perceive recently commercialized voice agents from a gender stereotyping perspective, we presented user commands as text rather than having participants interact with agents by voice, because the voice characteristics of agents could alter the perceived extent of gender stereotyping through individual differences. The survey was conducted online for one week via Facebook ads in South Korea. A total of 192 people (110 women and 82 men; gender was selected from two options) participated, with a mean age of 23.25 years (SD = 6.37). All participants were South Korean native speakers of Korean. The questionnaire consisted of three main parts. The first part concerned personal information, asking about participants’ age and gender and their experience using voice agents. The second part comprised questions about the gender appropriateness of user commands for voice agents. The last part consisted of questions assessing participants’ individual sexism. The survey took about seven minutes to complete, and ten participants, selected by raffle, received Starbucks vouchers worth 5,000 Korean won.

The questionnaire items for evaluating the gender appropriateness of user commands were prepared with reference to Table 2, which outlines male and female traits identified in previous research on the gender of robots (Eyssel, & Hegel, 2012). Based on the gender-stereotypical traits and tasks presented by Eyssel and Hegel, we designed a list of user commands for voice agents drawn from the manual of a commercial voice agent service (SK Telecom, 2019). For example, the user command “Tell me the information for a recent gaming laptop” was developed from servicing equipment, one of the male-stereotypical tasks. In total, 65 user commands were written. For each user command, participants judged which gender of voice agent would be likely to give the more appropriate answer, on a seven-point Likert scale (1: definitely appropriate for male agents, 7: definitely appropriate for female agents). The exact wording of the question was “Considering the gender of voice agents who are likely to give the right answer for each user command, please mark on the closest score among 1 to 7 points (1 point: Male-----7 point: Female).”

Although the user commands reflected gender-stereotypical traits and tasks from the previous study, participants were encouraged to select the gender-neutral answer (4 on the seven-point scale) if they held no gender role stereotypes about voice agents. Conversely, if participants did hold such stereotypes, their responses to the two groups of user commands (male and female) would differ significantly from the gender-neutral answer.
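
The comparison against the neutral midpoint can be illustrated with a one-sample t-test. The sketch below is a minimal illustration, not the authors' analysis code: it assumes ratings are coded as in the questionnaire (1 = male agent, 7 = female agent, 4 = neutral), and the function names `one_sample_t` and `leaning` and the sample ratings are hypothetical.

```python
import math
import statistics

NEUTRAL = 4.0  # gender-neutral midpoint of the 7-point scale (1 = male agent, 7 = female agent)


def one_sample_t(ratings, mu0=NEUTRAL):
    """Return (t, df) for a one-sample t-test of the mean rating against mu0."""
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two ratings")
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)  # sample standard deviation (n - 1 denominator)
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1


def leaning(mean, mu0=NEUTRAL):
    """Label which agent gender a mean rating leans toward, relative to the midpoint."""
    if mean < mu0:
        return "male"
    if mean > mu0:
        return "female"
    return "neutral"


# Hypothetical ratings of one user command by five respondents (not study data).
ratings = [2, 3, 2, 4, 3]
t, df = one_sample_t(ratings)
m = statistics.mean(ratings)
print(f"mean={m:.2f}, t({df})={t:.2f}, leans {leaning(m)}")
# prints: mean=2.80, t(4)=-3.21, leans male
```

A significance decision also needs the p-value from the t distribution with `df` degrees of freedom; if SciPy is available, `scipy.stats.ttest_1samp(ratings, 4)` returns the same statistic together with that p-value.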

4. Results

For the data analysis, we first verified scale validity by conducting a factor analysis and a reliability test to determine whether the user commands could be divided into male- and female-trait factors. Next, one-sample t-tests were conducted on the male- and female-trait-based user commands to determine whether their ratings differed significantly from the gender-neutral value. Afterward, independent-samples t-tests comparing male and female participants were used to examine differences in participants’ gender role stereotyping regarding the user commands. Finally, to determine whether individual sexism and age also affected the extent of gender stereotyping of voice agents, two multiple linear regressions were conducted, one for the male-trait-based and one for the female-trait-based user commands.
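
The regression step can be sketched as ordinary least squares solved through the normal equations. This is a minimal, dependency-free illustration under stated assumptions, not the authors' procedure: it assumes each participant's outcome is the mean rating across one command group (e.g., M1–M22) and that sexism is available as a single numeric score per participant; the function name `ols`, the data layout, and the example data are hypothetical.

```python
def ols(predictors, outcome):
    """Fit outcome = b0 + b1*x1 + ... + bk*xk by ordinary least squares.

    predictors: one row per participant, e.g. (sexism_score, age) -- hypothetical layout.
    Returns [b0, b1, ..., bk] by solving the normal equations (X^T X) b = X^T y.
    """
    rows = [[1.0] + [float(v) for v in row] for row in predictors]  # prepend intercept column
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * y for r, y in zip(rows, outcome))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] for i in range(k)]


# Hypothetical (sexism score, age) pairs and mean ratings, generated exactly from
# y = 1 + 0.5 * sexism + 0.1 * age, so the fit should recover those coefficients.
X = [(1, 20), (2, 22), (3, 21), (4, 25), (5, 30)]
y = [1 + 0.5 * s + 0.1 * a for s, a in X]
print([round(b, 6) for b in ols(X, y)])  # prints [1.0, 0.5, 0.1]
```

In practice, a statistics package such as statsmodels (`statsmodels.api.OLS`) would also report standard errors and p-values for each coefficient, which this sketch omits.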

First, to establish that the user commands can be classified into two groups, a factor analysis was conducted using principal component analysis and varimax rotation with Kaiser normalization. Following Yong and Pearce (2013), user commands with a factor loading below 0.4 (sixteen user commands) were excluded. In addition, one-sample t-tests were performed to identify user commands whose ratings differed significantly from 4, the gender-neutral value. As a result, the user commands for voice agents could be divided into two factors. Table 3 and Table 4 show that user commands M1 to M22 can be considered male-stereotype-inducing commands, whereas F1 to F27 can be classified as female-stereotype-inducing commands, since a mean below 4 indicates appropriateness for male agents and a mean above 4 indicates appropriateness for female agents. Each user command group showed high reliability (Cronbach’s α = .933 and .911 for the male and female groups, respectively). Examples of user commands classified as male-stereotyping were “Tell me the schedule for today’s Arsenal [an English Premier League football team] match” and “Tell me the information for a recent gaming laptop.” Examples of female-stereotyping user commands were “Order fabric softener” and “I’d like to make a malatang [a spicy Chinese soup], so let me know the recipe.” Full data for user commands M1–M22 and F1–F27 and for the other sixteen commands are provided in the Appendix.
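
The item-retention rule and the reliability coefficient reported above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes factor loadings have already been obtained from the PCA with varimax rotation (which the sketch does not reproduce) and that ratings are stored as one list per item. The function names `retain_items` and `cronbach_alpha`, the use of absolute loadings, and the toy data are assumptions. Cronbach's α is computed with the standard formula α = k/(k-1) · (1 - Σ item variances / variance of total scores).

```python
import statistics


def retain_items(loadings, threshold=0.4):
    """Keep items whose absolute factor loading is at least `threshold` (assumed cut-off rule)."""
    return [item for item, loading in loadings.items() if abs(loading) >= threshold]


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items[j][i] is participant i's rating on item j (every item rated by the same participants).
    alpha = k / (k - 1) * (1 - sum of item variances / variance of participants' total scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_variance_sum = sum(statistics.variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / statistics.variance(totals))


# Hypothetical loadings: item "X" falls below the 0.4 cut-off and is dropped.
print(retain_items({"M1": 0.72, "X": 0.35, "F1": 0.58}))  # prints ['M1', 'F1']
# Hypothetical ratings of three items by four participants (not study data).
print(round(cronbach_alpha([[2, 3, 1, 2], [2, 2, 1, 3], [3, 3, 1, 2]]), 3))  # prints 0.814
```

Applied to the study's data, the same functions would be run separately on the 22 male-trait and 27 female-trait items that remain after the 16 low-loading commands are removed (65 - 16 = 49 = 22 + 27).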

Table 3. Results of the descriptive statistics, t-test, and confirmatory factor analysis to divide user commands based on male traits

Table 4. Results of the descriptive statistics, t-test, and confirmatory factor analysis to divide user commands based on female traits

Next, to verify how personal characteristics affect gender role stereotyping of voice agents, we investigated several potential moderating factors: gender, age, and individual sexism. We first conducted independent-samples t-tests to determine whether gender role stereotyping of voice agents differed between male and female participants. To this end, we calculated the mean and standard deviation of the survey responses to the male-trait-based (M1–M22) and female-trait-based (F1–F27) user commands. As shown in Table 5, gender stereotyping of voice agents for the male-trait-based user commands did not differ significantly between male and female participants (t(190) = 1.96, p > .05). In contrast, gender stereotyping of voice agents induced by the female-trait-based user commands differed significantly between male and female participants (t(190) = 2.39, p < .05).
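
The group comparison can be sketched as Student's independent-samples t-test with pooled variance. The reported degrees of freedom are consistent with this variant: with 110 female and 82 male participants, df = 110 + 82 - 2 = 190, whereas Welch's unequal-variance test would generally give a non-integer df. Which variant was used is an assumption, since the text does not say; the function name `independent_t` and the example data are hypothetical.

```python
import math
import statistics


def independent_t(group_a, group_b):
    """Student's independent-samples t-test (pooled variance); returns (t, df)."""
    n1, n2 = len(group_a), len(group_b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    df = n1 + n2 - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled = ((n1 - 1) * statistics.variance(group_a) + (n2 - 1) * statistics.variance(group_b)) / df
    diff = statistics.mean(group_a) - statistics.mean(group_b)
    t = diff / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, df


# Hypothetical per-participant mean ratings with the study's group sizes (not study data).
female = [4.5, 5.0] * 55  # 110 participants
male = [4.0, 5.0] * 41    # 82 participants
t, df = independent_t(female, male)
print(f"t({df}) = {t:.2f}")  # prints t(190) = 4.51
```

If SciPy is available, `scipy.stats.ttest_ind(female, male, equal_var=True)` computes the same statistic together with a two-sided p-value.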

5. Discussion

Through the online survey and data analysis, we derived two findings. First, participants exhibited gender role stereotypes toward computer agents, which have no inherent gender, when judging the user commands developed in the present study from prior work on gender role stereotypes (Eyssel, & Hegel, 2012). This finding suggests that people can apply gender stereotypes not only to humans but also to gendered, anthropomorphized digital agents. Notably, the survey results show that gender role stereotyping toward computer agents remains strong despite the recent spread to South Korea of the global feminist movement represented by #MeToo (Gill, & Orgad, 2018). Second, participants’ gender role stereotyping toward computer agents depended on their own gender: stereotyping was more pronounced for female roles and among female participants. This finding relates to the modern sexism described by Swim et al. (Swim, Aikin, Hall, & Hunter, 1995). Modern sexism, characterized by denial or attenuation of gender discrimination (Sarrasin, Gabriel, & Gygax, 2012), hostility toward women, and a lack of support for policies designed to help women, has placed women in a more gender-discriminatory environment than men. Given these characteristics of modern sexism, women may have been pushed to become much more aware of their gender roles than men, which may explain the results of the present study. Nevertheless, this result should be re-examined with participants from other countries and cultures, since it may vary with a society’s level of gender equality.

The gender stereotypes identified in this study could become more widespread and problematic in the future. As generative AI, which can autonomously generate content such as text and images, becomes more widely applicable, various applications built on large language models have been released. Examples include tutor agents that assist with foreign language education (Marr, 2023) and colleague agents that can share the workload of corporate tasks (Schwartz, 2023). Given these developments, there is a risk that, as AI agents continue to advance, anthropomorphized agents may reinforce gender stereotypes while interacting with users in various situations. We therefore propose the following design guidelines for AI agents that can minimize gender stereotypes.

The first strategy is not to reveal the agent’s gender, or to present the agent in a gender-neutral form. This approach excludes design elements that disclose specific traits, such as the agent’s name, visual appearance, or voice, during interactions with users, thereby minimizing the level of anthropomorphism. It is particularly suitable when agents interact with users primarily in a task-oriented rather than relationship-oriented manner, especially in text-based interactions. Examples include OpenAI’s ‘ChatGPT’ and Microsoft’s ‘Bing.’ Note, however, that agents that interact with users through features such as voice or visual appearance may be less preferred by users when designed to be gender-neutral or ambiguous than when their gender is clearly presented (Yeon, Park, & Kim, 2023). Hence, this approach may be most appropriate in contexts where minimizing anthropomorphism is desirable.

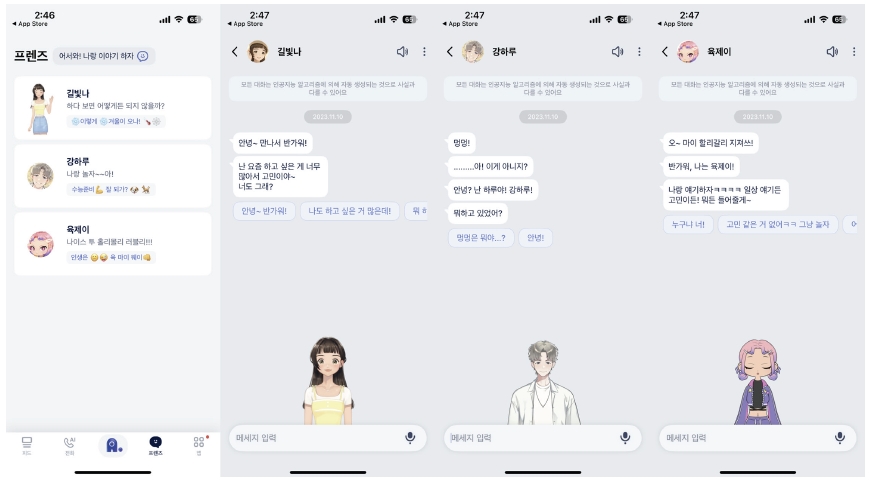

The second strategy is to use agents based on diverse characters with different personalities, so that agents performing a specific role are not limited to a single gender, either male or female. This strategy can be applied when agents need to interact by voice or when the agent’s appearance is part of the design, and it is also suited to situations with a high level of anthropomorphism. An example of this approach is ‘A.,’ released by SK Telecom (Sharma, 2023). As depicted in Figure 1, ‘A.’ provides an interface that allows users to interact with agents with various characters. Offering a variety of agents within one service lets users choose agents according to their preferences, potentially preventing the reinforcement of stereotypes.

Lastly, users could customize their own agents according to their preferences through an initial setup process, creating unique agents tailored to individual tastes. This method resembles avatar creation in computer games, where users have a relatively high degree of freedom to directly control the physical appearance, voice, style, or abilities of their avatars. If users can directly design the agent they will interact with through initial settings, the resulting agents are likely to vary widely from user to user. In this case, however, the degree of freedom offered by the agent-configuration interface may play a crucial role in determining the effectiveness of this approach.

Despite these findings, the study has some limitations. The survey did not present any agent voices before participants responded to the questions, so the results might be biased. In other words, participants’ gender stereotyping toward voice agents may stem from their pre-existing gender stereotypes about humans, even though the survey explicitly asked for evaluations of voice agents. Had gendered voices been presented, participants would have perceived the agents’ gender explicitly. Interestingly, participants revealed gender role stereotypes about voice agents even without any further information about the agents, especially regarding task performance. Also, the set of user commands used in this study has not been validated by previous studies; instead, we developed the list of user commands, classified into two groups by gender role appropriateness, based on the previous study of gender role stereotypes. This set of potentially gender-role-biased user commands should be validated through replication in future studies. In addition, responses favoring female voice agents might be biased, since most participants may have first experienced voice agents with female voices. Future studies should therefore empirically verify how the gender stereotypes about voice agents identified in this study affect actual users’ experience with voice agents, especially user satisfaction and preference. Further research is also needed on how to design voice agent services that prevent gender stereotyping of voice agents and the resulting decline in user satisfaction.

6. Conclusion

In this study, an online survey using user commands developed from gender-stereotypical traits and tasks revealed gender stereotyping of voice agents among both male and female users. In particular, female participants associated female-trait-based commands with female voice agents more strongly than male participants did. Despite some limitations, we anticipate that the types of voice commands identified in this study as inducing gender stereotypes could be valuable for future studies that evaluate AI agents for gender bias. At the same time, the design guidelines we have proposed for anthropomorphized AI agents are expected to help design practitioners create new agents free from gender bias. People readily apply their expectations of human beings to anthropomorphized agents as interlocutors in social interaction, and this tendency has positive consequences in many cases, such as social support (Torta et al., 2014) and attachment (Banks, Willoughby, & Banks, 2008). However, it also has side effects, such as the gender role stereotyping discovered in this study. Considering this, designers, developers, and IT companies should be aware of these unintended consequences so that they can develop agents that support gender equality.

Notes

Copyright : This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted educational and non-commercial use, provided the original work is properly cited.

References

- Abercrombie, G., Curry, A. C., Pandya, M., & Rieser, V. (2021). Alexa, Google, Siri: What are your pronouns? Gender and anthropomorphism in the design and perception of conversational assistants. arXiv preprint arXiv:2106.02578.

[https://doi.org/10.18653/v1/2021.gebnlp-1.4]

- Banks, M. R., Willoughby, L. M., & Banks, W. A. (2008). Animal-assisted therapy and loneliness in nursing homes: Use of robotic versus living dogs. Journal of the American Medical Directors Association, 9(3), 173-177.

[https://doi.org/10.1016/j.jamda.2007.11.007]

- Baska, M. (2022, Feb 23). Apple adds gender-neutral Siri option voiced by queer person. Meet 'Quinn'. PinkNews. Retrieved Jun 14, 2023 from https://www.thepinknews.com/2022/02/23/apple-gender-neutral-siri-voice-quinn/.

- Brescoll, V. L., Dawson, E., & Uhlmann, E. L. (2010). Hard won and easily lost: The fragile status of leaders in gender-stereotype-incongruent occupations. Psychological Science, 21(11), 1640-1642.

[https://doi.org/10.1177/0956797610384744]

- Eagly, A. H., & Wood, W. (1999). The origins of sex differences in human behavior: Evolved dispositions versus social roles. American Psychologist, 54(6), 408-423.

[https://doi.org/10.1037/0003-066X.54.6.408]

- Eagly, A. H. (2013). Sex differences in social behavior: A social-role interpretation. London: Psychology Press.

[https://doi.org/10.4324/9780203781906]

- Fournier-Tombs, E. (2021, July 14). Apple's Siri is no longer a woman by default, but is this really a win for feminism? The Conversation. Retrieved March 11, 2023 from https://theconversation.com/apples-siri-is-no-longer-a-woman-by-default-but-is-this-really-a-win-for-feminism-164030.

- Eyssel, F., & Hegel, F. (2012). (S)he's got the look: Gender stereotyping of robots. Journal of Applied Social Psychology, 42(9), 2213-2230.

[https://doi.org/10.1111/j.1559-1816.2012.00937.x]

- Gill, R., & Orgad, S. (2018). The shifting terrain of sex and power: From the 'sexualization of culture' to #MeToo. Sexualities, 21(8), 1313-1324.

[https://doi.org/10.1177/1363460718794647]

- Google. (2023). Set up Google Assistant on your device. Retrieved October 23, 2023 from https://support.google.com/assistant/answer/7172657?hl=en&ref_topic=7658198&sjid=15688186558215648265-AP.

- Hwang, G., Lee, J., Oh, C. Y., & Lee, J. (2019, May). It sounds like a woman: Exploring gender stereotypes in South Korean voice assistants. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-6).

[https://doi.org/10.1145/3290607.3312915]

- Kim, H. (2021, January 11). CEO says controversial AI chatbot 'Luda' will socialize in time. The Korea Herald. Retrieved January 7, 2024 from https://www.koreaherald.com/view.php?ud=20210111001051.

- Lee, H. E. (2018). Why do voice activated technologies sound female?: Sound technology and gendered voice of digital voice assistants. Korean Association For Communication & Information Studies, 90, 126-153.

[https://doi.org/10.46407/kjci.2018.08.90.126]

- Loideain, N. N., & Adams, R. (2020). From Alexa to Siri and the GDPR: the gendering of virtual personal assistants and the role of data protection impact assessments. Computer Law & Security Review, 36, 105366.

[https://doi.org/10.1016/j.clsr.2019.105366]

- Luger, E., & Sellen, A. (2016, May). "Like Having a Really Bad PA": The Gulf between User Expectation and Experience of Conversational Agents. In Proceedings of the 2016 CHI conference on human factors in computing systems (pp. 5286-5297).

[https://doi.org/10.1145/2858036.2858288]

- Marr, B. (2023, April 28). The Amazing Ways Duolingo Is Using AI And GPT-4. Forbes. Retrieved November 9, 2023 from https://www.forbes.com/sites/bernardmarr/2023/04/28/the-amazing-ways-duolingo-is-using-ai-and-gpt-4/?sh=3d3220cb1346.

- Nass, C., Steuer, J., & Tauber, E. R. (1994, April). Computers are social actors. In Proceedings of the SIGCHI conference on Human factors in computing systems (pp. 72-78).

[https://doi.org/10.1145/191666.191703]

- Nass, C., Moon, Y., & Green, N. (1997). Are machines gender neutral? Gender-stereotypic responses to computers with voices. Journal of Applied Social Psychology, 27(10), 864-876.

[https://doi.org/10.1111/j.1559-1816.1997.tb00275.x]

- Nass, C., & Moon, Y. (2000). Machines and mindlessness: Social responses to computers. Journal of Social Issues, 56(1), 81-103.

[https://doi.org/10.1111/0022-4537.00153]

- Pastrovich, J. (2017, September 13). Want your kid to be bilingual? Alexa could help. Quartz. Retrieved March 11, 2023 from https://qz.com/1074540/want-your-kid-to-be-bilingual-alexa-could-help.

- Powers, A., Kramer, A. D., Lim, S., Kuo, J., Lee, S. L., & Kiesler, S. (2005, August). Eliciting information from people with a gendered humanoid robot. In ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, 2005 (pp. 158-163). IEEE.

- Robertson, J. (2010). Gendering humanoid robots: Robo-sexism in Japan. Body & Society, 16(2), 1-36.

[https://doi.org/10.1177/1357034X10364767]

- Samsung. (2023). Customize Bixby Voice on your Galaxy phone or tablet. Retrieved October 23, 2023 from https://www.samsung.com/us/support/answer/ANS00076751/.

- Sarrasin, O., Gabriel, U., & Gygax, P. (2012). Sexism and attitudes toward gender-neutral language. Swiss Journal of Psychology.

[https://doi.org/10.1024/1421-0185/a000078]

- Schwartz, H. E. (2023, June 30). Dutch Generative AI Startup Neople Raises $1.65M. Voicebot.ai. Retrieved November 9, 2023 from https://voicebot.ai/2023/06/30/dutch-generative-ai-startup-neople-raises-1-65m/.

- Sharma, R. (2023, July 11). SKT Unveils Major UX Overhaul of its AI Service 'A.'. TheFastMode. Retrieved November 11, 2023 from https://www.thefastmode.com/technology-solutions/32680-skt-unveils-major-ux-overhaul-of-its-ai-service-a.

- SK Telecom. (2019). NUGU Service Manual. Retrieved March 11, 2023 from https://www.nugu.co.kr/static/service/.

- Søndergaard, M. L. J., & Hansen, L. K. (2018, June). Intimate futures: Staying with the trouble of digital personal assistants through design fiction. In Proceedings of the 2018 designing interactive systems conference (pp. 869-880).

[https://doi.org/10.1145/3196709.3196766]

- Swim, J. K., Aikin, K. J., Hall, W. S., & Hunter, B. A. (1995). Sexism and racism: Old-fashioned and modern prejudices. Journal of Personality and Social Psychology, 68(2), 199-214.

[https://doi.org/10.1037/0022-3514.68.2.199]

- Tinsely, C. H., & Ely, R. J. (2018). What most people get wrong about men and women. Harvard Business Review. Retrieved March 11, 2023 from https://hbr.org/2018/05/what-most-people-get-wrong-about-men-and-women.

- Torta, E., Werner, F., Johnson, D. O., Juola, J. F., Cuijpers, R. H., Bazzani, M., ... & Bregman, J. (2014). Evaluation of a small socially-assistive humanoid robot in intelligent homes for the care of the elderly. Journal of Intelligent & Robotic Systems, 76, 57-71.

[https://doi.org/10.1007/s10846-013-0019-0]

- Vincent, J. (2021). Google's AI reservation service Duplex is now available in 49 states. The Verge. Retrieved March 11, 2023 from https://www.theverge.com/2021/4/1/22361729/google-duplex-ai-reservation-availability-49-us-states.

- Weisman, H., & Janardhanan, N. S. (2020). The instantaneity of gendered voice assistant technology and manager perceptions of subordinate help. In Academy of Management Proceedings (Vol. 2020, No. 1, p. 21149). Briarcliff Manor, NY 10510: Academy of Management.

[https://doi.org/10.5465/AMBPP.2020.21149abstract]

- West, M., Kraut, R., & Chew, H. E. (2019). I'd blush if I could: closing gender divides in digital skills through education. EQUALS and UNESCO. Retrieved January 7, 2024 from https://unesdoc.unesco.org/ark:/48223/pf0000367416?posInSet=4&queryId=N-EXPLORE-5525f982-dcbe-4e33-b8c3-f352d68b0f93.

- Wilbourn, M. P., & Kee, D. W. (2010). Henry the nurse is a doctor too: Implicitly examining children's gender stereotypes for male and female occupational roles. Sex Roles, 62, 670-683.

[https://doi.org/10.1007/s11199-010-9773-7]

- Xu, Y., & Warschauer, M. (2019, May). Young children's reading and learning with conversational agents. In Extended abstracts of the 2019 CHI conference on human factors in computing systems (pp. 1-8).

[https://doi.org/10.1145/3290607.3299035]

- Yeon, J., Park, Y., & Kim, D. (2023). Is Gender-Neutral AI the Correct Solution to Gender Bias. Archives of Design Research, 36(2), 63-90.

[https://doi.org/10.15187/adr.2023.05.36.2.63]

- Yong, A. G., & Pearce, S. (2013). A beginner's guide to factor analysis: Focusing on exploratory factor analysis. Tutorials in Quantitative Methods for Psychology, 9(2), 79-94.

[https://doi.org/10.20982/tqmp.09.2.p079]